BrainX Waves: The Newsletter of BrainX Community

September 2025: Issue 14

Learn

Can AI lead to loss of skills amongst the healthcare workforce? Should we be concerned?

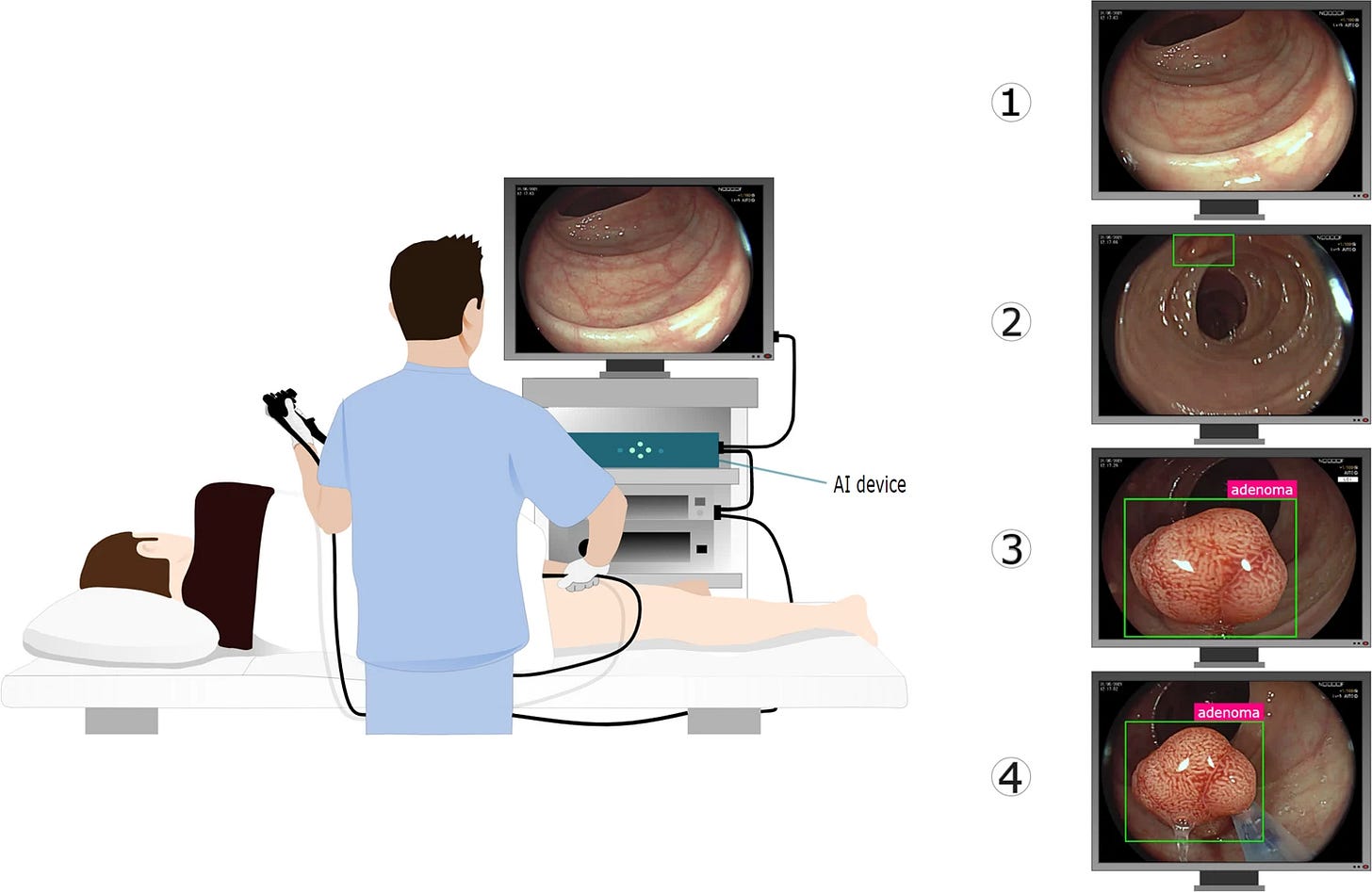

In a first-of-its-kind study, Marcin Romańczyk, et al., found a negative impact of regular AI use on healthcare professionals' ability to complete a patient-relevant task in medicine. The researchers conducted a retrospective, observational study at four endoscopy centres in Poland taking part in the ACCEPT (Artificial Intelligence in Colonoscopy for Cancer Prevention) trial. These centres introduced AI tools for polyp detection at the end of 2021, after which colonoscopies had been randomly assigned to be conducted with or without AI assistance according to the date of examination.

Amongst the colonoscopies performed between Sept 8, 2021, and March 9, 2022, 1443 patients underwent non-AI-assisted colonoscopy before (n=795) and after (n=648) the introduction of AI (median age 61 years [IQR 45–70], 847 [58·7%] female, 596 [41·3%] male). The adenoma detection rate (ADR) of standard colonoscopy decreased significantly from 28·4% (226 of 795) before to 22·4% (145 of 648) after exposure to AI, corresponding with an absolute difference of –6·0% (95% CI –10·5 to –1·6; p=0·0089). The authors concluded that continuous exposure to AI might reduce the ADR of standard non-AI-assisted colonoscopy, suggesting a negative effect on endoscopist behaviour.

It is clear that given the research, development and adoption of AI in medicine are rapidly spreading, this could be a cause of concern. Evolution in skills is a major part of any technological advancement. In prior studies, AI has also shown skill enhancement with the use of AI, such as performance of ultrasound for fetal age estimation or echocardiography. We need more research in this area to understand the impact of AI adoption in healthcare on skills and patient care delivery outcomes.

Reference publication: Endoscopist deskilling risk after exposure to artificial intelligence in colonoscopy: a multicentre, observational study. Budzyń, Krzysztof et al. The Lancet Gastroenterology & Hepatology

Connect

Register to join our upcoming BrainX Community Live event themed, ‘Reporting Guidelines for Generative AI’, on September 16, 2025; 11am - noon ET via Zoom for free. This event will feature Bright Huo MD & Giovanni Cacciamani MD, who will present the recent CHART reporting guidelines, and we will discuss other reporting guidelines for Generative AI research and publication.

Registration link: https://us02web.zoom.us/meeting/register/99GG_Vb6ReS2c_hAN-64GA#/registration

Program Agenda:

– Introduction

– “Reporting guidelines for chatbot health advice studies: the Chatbot Assessment Reporting Tool (CHART) statement”: Bright Huo MD; Giovanni Cacciamani MD

– Q&A

Related publications: Reporting guideline for chatbot health advice studies: the Chatbot Assessment Reporting Tool (CHART) statement. Chart Collaborative. BMJ Medicine. 2025;4:e001632.

Watch the video of our BrainX Community Live event themed: ‘Radiology platforms for AI implementation’, featuring Drs. Pranav Rajpurkar, Co-founder of a2z Radiology AI, and Vidur Mahajan, CEO for CARPL.ai.

Datasets (Open source)

Consumer-health-research:Personal Health Large Language Model (PH-LLM) dataset

All input data and associated expert responses for all 857 case studies in sleep and fitness used for training and evaluation, along with all model responses to the 100 holdout case studies, are stored in JSON format. All input and target prediction data, which include bucketized age, daily sensor readings for 20 sensors over 15 days and 16 binary survey responses for all 4,163 samples in the PRO task, are stored in JSON format.

FactEHR: A Benchmark for Fact Decomposition of Clinical Notes

FactEHR is a benchmark dataset designed to evaluate the ability of large language models (LLMs) to perform factual reasoning over clinical notes. It includes:

2,168 deidentified notes from multiple publicly available datasets

8,665 LLM-generated fact decompositions

987,266 entailment pairs evaluating precision and recall of facts

1,036 expert-annotated examples for evaluation

FactEHR supports LLM evaluation across tasks like information extraction, entailment classification, and model-as-a-judge reasoning.

Conferences

Additional BXC-featured publications

Generative AI/LLM

Apoorva Srinivasan; Jacob Berkowitz; Nadine A. Friedrich,et al

Generative AI/LLM

Generative Medical Event Models Improve with Scale

Shane Waxler, Paul Blazek, Rahul Shah, et al.

Endocrinology

Kevin M. Pantalone, Huijun Xiao, and Jeffrey I. Mechanick, et al.

Generative AI/LLM

Bright Huo and The CHART Collaborative

Generative AI/LLM

Fidelity of Medical Reasoning in Large Language Models

Suhana Bedi; Yixing Jiang; Philip Chung, et al

General/Generative AI/LLM

A personal health large language model for sleep and fitness coaching

Khasentino, J., Belyaeva, A., Liu, X. et al.

General/Generative AI/LLM

Evaluating Hospital Course Summarization by an Electronic Health Record–Based Large Language Model

William R. Small; Jonathan Austrian; Luke O’Donnell, et al.

Join and follow the BrainX community!

Webpage: https://brainxai.org/

Newsletter: https://brainxai.substack.com/subscribe

LinkedIn: https://www.linkedin.com/groups/13599549/

Youtube: https://www.youtube.com/channel/UCua5EiLL6I29hpNrJsdv1rg